Context-aware HTTP caching

Many web applications mix personalized views, views for certain groups of users, and views that are the same for every user of the application. Caching all of these views can be a challenge. Luckily, reverse proxies like Varnish specialize in caching web applications, as long as your application talks HTTP the right way.

Basic HTTP caching

HTTP involves two important concepts. First there is the request, which contains:

- the request type (GET/HEAD/POST/PUT/..)
- the path (/, /news)
- several headers (Accept, Host, User-Agent)
- sometimes a request body

Then there is the response, which contains headers and optionally a response body. When requesting the front page of archlinux.org, the request and response look like the following:

    ~ $ curl --verbose --head www.archlinux.org
    > HEAD / HTTP/1.1
    > User-Agent: curl/7.27.0
    > Host: www.archlinux.org
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < Date: Sun, 12 Aug 2012 20:59:35 GMT
    < Server: Apache
    < Vary: Cookie,User-Agent,Accept-Encoding
    < Cache-Control: max-age=300
    < Content-Length: 23580
    < Content-Type: text/html; charset=utf-8

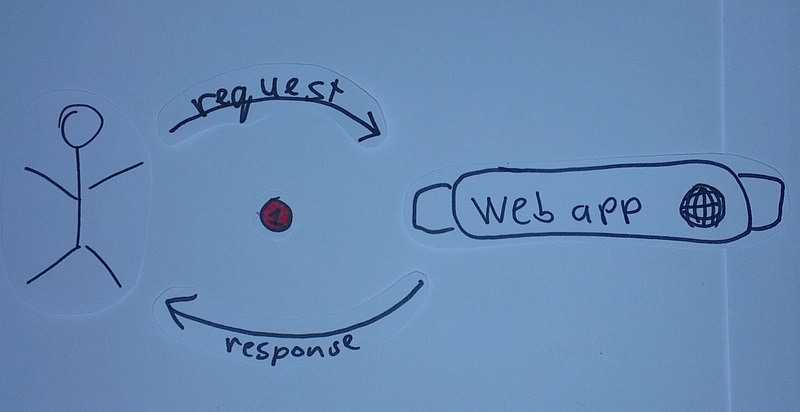

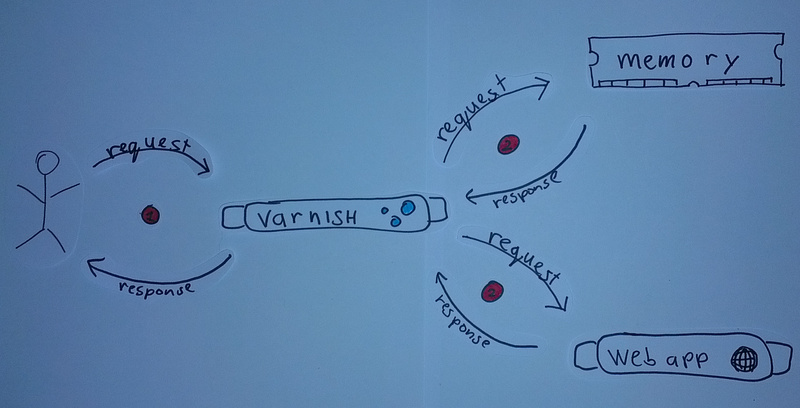

Varnish is a caching reverse proxy which sits between the user and the web application it is caching for. This means that every request from the user reaches the server as usual (1), but instead of hitting the web application directly, the request is first handled by Varnish. Varnish then either serves the response from memory if that's possible, or proxies the request to the web application (2).

For a caching reverse proxy like Varnish, two headers in the archlinux.org response are more interesting than the rest. First there is the Cache-Control: max-age=300 header, which tells Varnish that the response may be cached for 300 seconds. Next there is the Vary: Cookie,User-Agent,Accept-Encoding header. It tells Varnish that it can indeed cache the response, but that it has to keep a separate copy in cache for each combination of values of the Cookie, User-Agent and Accept-Encoding request headers.
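To make the effect of Vary concrete, here is a minimal PHP sketch of how a cache could derive a lookup key from the URL plus the request headers named in Vary. The function name varyCacheKey and the sample header values are made up for illustration, and this is not how Varnish stores variants internally; it only shows which requests end up sharing a cached response.

```php
<?php
// Hypothetical helper: build a cache key from the URL and the request
// headers that the response's Vary header names.
function varyCacheKey(string $url, string $vary, array $requestHeaders): string
{
    // Header names are case-insensitive, so normalise them first.
    $normalised = array_change_key_case($requestHeaders, CASE_LOWER);

    $parts = [$url];
    foreach (explode(',', $vary) as $name) {
        $name = strtolower(trim($name));
        if ($name === '') {
            continue;
        }
        // A header that is absent from the request counts as an empty value.
        $parts[] = $name . '=' . ($normalised[$name] ?? '');
    }

    return hash('sha256', implode("\n", $parts));
}

$vary = 'Cookie,User-Agent,Accept-Encoding';
$curl = ['User-Agent' => 'curl/7.27.0', 'Accept' => '*/*'];
$curlOtherAccept = ['user-agent' => 'curl/7.27.0', 'Accept' => 'text/html'];
$browser = ['User-Agent' => 'Mozilla/5.0', 'Accept' => '*/*'];

// Accept is not listed in Vary, so these two requests share one entry.
var_dump(varyCacheKey('/', $vary, $curl) === varyCacheKey('/', $vary, $curlOtherAccept)); // bool(true)

// User-Agent is listed in Vary, so a different client gets its own entry.
var_dump(varyCacheKey('/', $vary, $curl) === varyCacheKey('/', $vary, $browser)); // bool(false)
```

The more headers a response varies on, and the more distinct values those headers take, the more copies the cache has to keep and the lower the hit rate becomes.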

Adding more context

Using the Vary header, a web application can instruct HTTP caches to keep different versions of a response based on properties of the request. For example, Vary: Cookie makes sure a different response is kept for each value of the Cookie request header. This makes it possible to keep one cached response for anonymous users and a separate one for each logged-in user, who has their own cookie. However, it would be nice to add more information to the request so we can cache at a more granular level.

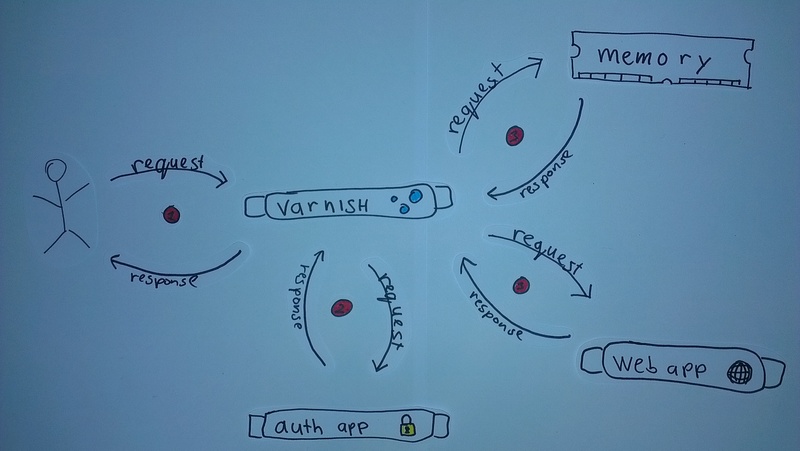

We can add more information to the request by adding another step to the request flow. Before the request from the user is passed to the application, Varnish tries to authenticate the user. Once the user is authenticated, additional headers such as X-User and X-Group are added to the request. These extra headers allow the application to instruct the HTTP cache to vary per user (Vary: X-User) or per group (Vary: X-Group). We can now create a cache that is shared between multiple users of the same group!

Pre-authenticating users

To authenticate a user and add extra context to the request before it reaches the actual web application, an extra step is added to the request flow. The user still sends a request to the server as usual (1). Next, Varnish makes a request to a dedicated authentication endpoint in order to authenticate the request (2). Finally, Varnish either sends the actual request to the backend or retrieves the response from memory (3).
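The three steps can be modelled in a few lines of PHP. This is only a sketch under assumptions: authenticate(), backend() and handle() are hypothetical stand-ins for the authentication endpoint, the web application and the proxy, the tokens are invented, and the cache ignores expiry and supports a single Vary header.

```php
<?php
// Hypothetical stand-in for the authentication endpoint: maps a token to
// context headers, or returns null when the token is unknown.
function authenticate(string $token): ?array
{
    $known = [
        'token-a' => ['X-User' => 'a', 'X-Group' => '1'],
        'token-b' => ['X-User' => 'b', 'X-Group' => '1'],
        'token-c' => ['X-User' => 'c', 'X-Group' => '2'],
    ];
    return $known[$token] ?? null;
}

// Hypothetical stand-in for the web application. It varies on X-Group only
// and numbers its responses so cache hits are easy to spot.
function backend(string $url, array $headers): array
{
    static $calls = 0;
    $calls++;
    return [
        'vary' => 'X-Group',
        'body' => sprintf('response #%d for group %s', $calls, $headers['X-Group']),
    ];
}

function handle(string $url, string $token, array &$cache): string
{
    // Step 2: authenticate first and obtain the context headers.
    $headers = authenticate($token);
    if ($headers === null) {
        return '401 Not authorized';
    }

    // Step 3: serve from cache when a stored variant matches...
    foreach ($cache[$url] ?? [] as $entry) {
        if ($entry['varyValue'] === ($headers[$entry['vary']] ?? '')) {
            return $entry['body'];
        }
    }

    // ...otherwise ask the backend and remember the response.
    $response = backend($url, $headers);
    $cache[$url][] = [
        'vary' => $response['vary'],
        'varyValue' => $headers[$response['vary']] ?? '',
        'body' => $response['body'],
    ];
    return $response['body'];
}

$cache = [];
echo handle('/', 'token-a', $cache), "\n"; // response #1 for group 1
echo handle('/', 'token-b', $cache), "\n"; // response #1 for group 1 (cache hit)
echo handle('/', 'token-c', $cache), "\n"; // response #2 for group 2
echo handle('/', 'bogus', $cache), "\n";   // 401 Not authorized
```

Because authentication happens before the cache lookup, an unauthenticated request never sees cached content, and the backend is only asked once per group.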

Configuring Varnish

The following example assumes an API where a query parameter ?access_token=foo is used for authentication. The example Varnish configuration makes use of libvmod-curl, which adds curl bindings to the configuration language of Varnish. [1] Of course, the curl part of the example could be swapped for another implementation that calls some other service, such as Redis.

    # libvmod-curl has to be imported before its functions can be used
    import curl;

    # The routine handling received requests
    sub vcl_recv {
        # At first entry, authenticate
        if (req.restarts == 0 && req.url !~ "/token.php") {
            # The URL should contain "access_token"
            if (req.url !~ "access_token") {
                error 401 "Not authorized";
            }

            # Extract the access token and call a specialized script to authenticate it
            curl.fetch("http://localhost/token.php?token=" + regsub(req.url, "(.*)access_token=([^&]+)(.*)", "\2"));

            # Check the status code of the response
            if (curl.status() != 200) {
                error 401 "Not authorized";
            }

            # Add additional headers to the original request
            set req.http.X-Auth-User = curl.header("X-Auth-User");
            set req.http.X-Auth-Scope = curl.header("X-Auth-Scope");
            set req.http.X-Auth-Group = curl.header("X-Auth-Group");
            curl.free();

            # Remove the access token from the original URL
            set req.url = regsub(req.url, "(.*)access_token=([^&]+)(.*)", "\1\3");
        }
    }
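To see what the two regsub calls do to a URL, the same pattern can be tried out in PHP with preg_replace. This is a sketch for experimenting with the regular expression only; splitToken is a hypothetical helper and not part of the setup.

```php
<?php
// Same pattern as in the VCL above, wrapped in PCRE delimiters.
$pattern = '~(.*)access_token=([^&]+)(.*)~';

// Hypothetical helper mirroring the two regsub calls. The limit of 1
// matches regsub, which only replaces the first match.
function splitToken(string $url, string $pattern): array
{
    return [
        // "\2" in VCL: keep only the token value.
        'token' => preg_replace($pattern, '$2', $url, 1),
        // "\1\3" in VCL: keep everything except the token parameter.
        'url' => preg_replace($pattern, '$1$3', $url, 1),
    ];
}

print_r(splitToken('/?access_token=a,1&shared', $pattern));
// token => a,1   url => /?&shared

print_r(splitToken('/news?page=2&access_token=b,1', $pattern));
// token => b,1   url => /news?page=2&
```

The rewritten URL keeps a stray ? or & where the parameter used to be. That is harmless for the scripts used here, but since the URL is part of what Varnish caches on, the same page requested with the token in a different position is stored under a different URL.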

Using two basic PHP scripts as a "web application", I will try to show how this works in practice. First there is token.php, which is responsible for authenticating a given token:

    <?php
    header('Cache-Control: no-cache,private');

    // Get "user,group" from the token parameter
    list($user, $group) = explode(',', $_GET['token']);

    // Add "context" headers as much as you like
    header('X-Auth-User: ' . $user);
    header('X-Auth-Group: ' . $group);
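Note that token.php as shown answers every request with a 200 status, so the status check in the Varnish configuration never rejects anything. A real endpoint would validate the token first. The following is a minimal sketch of such a check, assuming the same "user,group" token format; parseToken is a hypothetical helper.

```php
<?php
// Hypothetical helper: parse "user,group" and return null when malformed.
function parseToken(?string $token): ?array
{
    if ($token === null) {
        return null;
    }

    // Reject control characters so a token can never span header lines.
    if (preg_match('/[\x00-\x1F\x7F]/', $token) === 1) {
        return null;
    }

    // Expect exactly two non-empty parts.
    $parts = explode(',', $token);
    if (count($parts) !== 2 || $parts[0] === '' || $parts[1] === '') {
        return null;
    }

    return ['user' => $parts[0], 'group' => $parts[1]];
}

var_dump(parseToken('a,1') !== null); // bool(true)
var_dump(parseToken('a') === null);   // bool(true)
var_dump(parseToken(null) === null);  // bool(true)
```

In token.php the result could be used to call http_response_code(401) and stop when parseToken($_GET['token'] ?? null) returns null, and to send the X-Auth-* headers otherwise.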

Next there is index.php, which returns different output and Vary headers based on the given query parameters. The output of the script is prefixed with the Unix timestamp, in seconds with sub-second precision.

    <?php
    // Cache every response for 10 seconds unless a branch below overrides it
    header('Cache-Control: max-age=10');

    if (isset($_GET['shared'])) {
        // One cached response per group
        header('Vary: X-Auth-Group');
        out("Shared response for " . $_SERVER['HTTP_X_AUTH_GROUP'] . "!");
    } else if (isset($_GET['nocache'])) {
        // Replaces the Cache-Control header set above
        header('Cache-Control: no-cache');
        out("Never cached!");
    } else if (count($_POST) > 0) {
        out("Hi " . $_SERVER['HTTP_X_AUTH_USER'] . ", this was a POST request!");
    } else {
        // One cached response per user
        header('Vary: X-Auth-User');
        out("Hi " . $_SERVER['HTTP_X_AUTH_USER'] . "!");
    }

    // Print a line prefixed with the current Unix timestamp
    function out($line)
    {
        echo sprintf("[%s] %s\n", microtime(true), $line);
    }

With Varnish configured to cache the responses from the scripts using the pre-authentication method described above, responses from index.php look like the following:

    # Requesting user specific content
    ~ $ curl "localhost/?access_token=a,1"
    [1348606150.7984] Hi a!
    ~ $ curl "localhost/?access_token=a,1"
    [1348606150.7984] Hi a!
    ~ $ curl "localhost/?access_token=b,1"
    [1348606152.8321] Hi b!
    ~ $ curl "localhost/?access_token=b,1"
    [1348606152.8321] Hi b!
    ~ $ curl "localhost/?access_token=c,2"
    [1348606154.8652] Hi c!
    ~ $ curl "localhost/?access_token=d,2"
    [1348606155.8784] Hi d!

As one can see, the response is cached and resent, but a different response is sent for each user.

    ~ $ curl "localhost/?access_token=a,1&shared"
    [1348606026.4899] Shared response for 1!
    ~ $ curl "localhost/?access_token=b,1&shared"
    [1348606026.4899] Shared response for 1!
    ~ $ curl "localhost/?access_token=c,2&shared"
    [1348606030.5253] Shared response for 2!
    ~ $ curl "localhost/?access_token=d,2&shared"
    [1348606030.5253] Shared response for 2!
    ~ $ curl "localhost/?access_token=b,1&shared"
    [1348606034.5583] Shared response for 1!

This time, the responses for users a and b are the same because both are part of group 1. Again the responses are served from cache, but this time they differ based on the group of the user.

    ~ $ curl -d "foo=bar" "localhost/?access_token=a,1"
    [1348606314.1293] Hi a, this was a POST request!
    ~ $ curl -d "foo=bar" "localhost/?access_token=a,1"
    [1348606314.746] Hi a, this was a POST request!

POST requests issued to the server are authenticated. The response of the web application, however, is not cached.

    ~ $ curl "localhost/?access_token=a,1&nocache"
    [1348606204.3077] Never cached!
    ~ $ curl "localhost/?access_token=a,1&nocache"
    [1348606205.3246] Never cached!

For completeness' sake, there is also an example of a response that is never cached by Varnish.

Summary

- The Vary header lets an application decide whether a cached response is kept per user, per group or for everyone

- Authenticating users before hitting the actual web application opens new possibilities for HTTP caching

Having the authentication part of the application separated also opens new possibilities, such as caching authentication requests or rate limiting users.